Webinar Recap: De-risking Your Microsoft Copilot Deployment

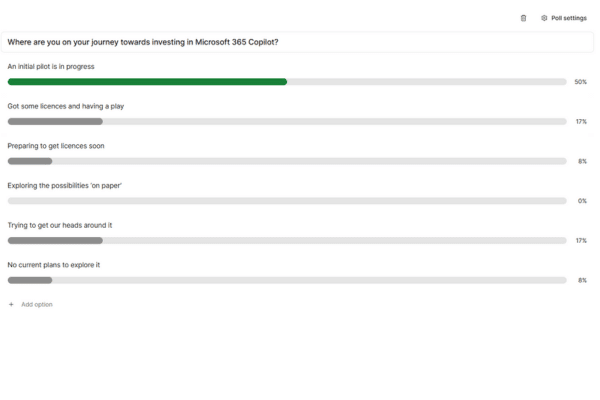

In our recent webinar, we covered AI governance, data security, and the deployment of Microsoft Copilot. To gauge where attendees were in their AI journey, we opened with a Slido poll. The audience was at various stages, from initial pilots to no current plans at all.

Responsible AI Standards and Shared Responsibility Model

Our Compliance Lead, Nivasha, took the floor to discuss the shared responsibility model of Microsoft 365. She stressed how important it is for organisations to have their own controls for data governance, security, and compliance. She also talked about the need for robust policies, training, and compliance oversight to ensure that AI is used responsibly and ethically. Nivasha highlighted Microsoft’s Responsible AI Standard and Copilot Copyright Commitment, which aim to ensure ethical AI use and protect intellectual property.

AI governance and the regulatory landscape

We then shifted gears to talk about the evolving AI governance and regulatory landscape, covering:

- EU AI Act: The European Union's AI Act, which entered into force on 1 August 2024, regulates AI systems based on their risk levels. The Act categorises AI systems into four risk levels: unacceptable risk, high risk, limited risk, and minimal risk. High-risk AI systems will be subject to stringent requirements, including transparency, accountability, and data governance measures.

- ISO 42001: The International Organization for Standardization (ISO) has introduced ISO/IEC 42001, an artificial intelligence management system standard. This standard sets out requirements and guidance for managing AI systems, focusing on transparency, governance, data privacy, security, and fairness.

- NIST AI Risk Management Framework: The National Institute of Standards and Technology (NIST) has released a framework for managing AI risks. This framework emphasises the importance of transparency, accountability, and risk-based approaches to AI governance.

Promoting user accountability was another key topic Nivasha covered. She talked about the importance of having governance controls, including policies, training, and compliance oversight, to ensure responsible and ethical use of AI. She also stressed the need for measures to control access to AI tools, ensuring that only trained and aware employees can use them.

Identity management and security

Johann, Cloud Essentials’ Managing Director SA and technical Microsoft expert, then discussed the importance of identity management and security in deploying Microsoft Copilot. He highlighted the use of sensitivity labels and data loss prevention policies to protect sensitive information. Johann introduced Microsoft’s Data Security Posture Management (DSPM) solution, which helps organisations monitor and manage AI use, providing insights and recommendations to address risks.

Gethin, our Key Account Specialist, wrapped up the session with a summary of quick wins for deployment and governance. He emphasised the importance of context-aware detection, dynamic controls, and continuous improvement in data governance.

Practical Tips for AI Governance:

- Develop Comprehensive Policies: Create and implement AI policies that address transparency, data privacy, fairness, and accountability. Ensure these policies are regularly reviewed and updated.

- Establish a Governance Panel: Form a governance panel or task force with stakeholders from across the organisation to oversee AI usage and ensure compliance with regulatory obligations.

- Conduct Regular Audits: Perform regular audits and assessments of AI systems to identify and mitigate biases, ensure compliance with ethical standards, and address potential risks.

- Implement Data Protection Measures: Ensure robust data protection measures are in place to safeguard personal and sensitive data used by AI systems. This includes encryption, access controls, and data anonymisation techniques.

- Adopt a Risk-Based Approach: Focus on identifying and mitigating potential risks associated with AI technologies. Prioritise high-risk AI systems and implement appropriate controls to manage these risks.
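
For teams that keep an inventory of their AI systems, the risk-based approach above can be sketched in a few lines of Python. This is only an illustration, not something shown in the webinar: the `AISystem` record, the `review_order` helper, and the example inventory are all hypothetical, and the tiers assigned to each example are placeholders rather than a legal classification under the EU AI Act.

```python
from dataclasses import dataclass
from enum import IntEnum


class RiskTier(IntEnum):
    """The four EU AI Act risk levels; a higher value means higher risk."""
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4


@dataclass(frozen=True)
class AISystem:
    """Hypothetical inventory record for one AI system."""
    name: str
    tier: RiskTier
    controls_in_place: bool


def review_order(systems):
    """Sort for review: highest tier first, then systems without controls, then by name."""
    return sorted(systems, key=lambda s: (-s.tier, s.controls_in_place, s.name))


# Hypothetical inventory; the tiers are illustrative, not a legal assessment.
inventory = [
    AISystem("Meeting summariser", RiskTier.MINIMAL, True),
    AISystem("CV screening assistant", RiskTier.HIGH, False),
    AISystem("Customer chatbot", RiskTier.LIMITED, False),
    AISystem("Credit scoring model", RiskTier.HIGH, True),
]

for system in review_order(inventory):
    print(f"{system.tier.name:<12} controls={system.controls_in_place!s:<5} {system.name}")
```

Sorting on the tier first and on missing controls second means a governance panel sees high-risk systems without controls at the top of its review list.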